3 cost considerations for your multi-cloud environment

By Alex Hawkes|30 May, 2022

A multi-cloud strategy has become the status quo for many organisations. It provides access to the broadest range of cloud services and best-of-breed technologies, along with a reduced risk of vendor lock-in.

As a result, most organisations will use a number of the most popular service options alongside more niche or localised offerings.

This means the big-name hyperscalers will continue to retain a significant share of enterprise IT spend, with research house Gartner predicting “the 10 biggest public cloud providers will command, at a minimum, half of the total public cloud market until at least 2023”.

To some degree this means enterprises will benefit from price competition, but without a proper strategy and oversight in place, multi-cloud deployments can also introduce extra costs that are not present in single-cloud environments.

The increased complexity of a multi-cloud environment

Different clouds provide different benefits, but getting maximum value from multiple clouds largely comes down to how effectively a company can manage an increasingly complex application portfolio and infrastructure.

The affordability of public cloud means organisations can easily create and store large amounts of data, but that data often needs to move between different locations or different clouds. Costs can mount up each time an application component or data set crosses a cloud platform boundary.
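As a rough illustration of how boundary crossings add up, the sketch below totals a month of data movement. It assumes transfers are billed per gigabyte by the cloud the data leaves, and that movement within a single cloud is free. The `DataFlow` type, the function name, and the prices are hypothetical placeholders, not any provider's actual rates.

```python
from dataclasses import dataclass


@dataclass
class DataFlow:
    source_cloud: str
    destination_cloud: str
    gb_per_month: float


def monthly_transfer_cost(flows, egress_price_per_gb):
    """Sum the cost of flows that cross a cloud boundary.

    Assumption: only cross-cloud flows are charged, at the source
    cloud's per-GB egress price. Real pricing is usually tiered.
    """
    total = 0.0
    for flow in flows:
        if flow.source_cloud != flow.destination_cloud:
            total += flow.gb_per_month * egress_price_per_gb[flow.source_cloud]
    return total


# Hypothetical placeholder prices in USD per GB, for illustration only.
example_prices = {"aws": 0.10, "gcp": 0.10}

example_flows = [
    DataFlow("aws", "gcp", 500.0),   # crosses a boundary, so it is charged
    DataFlow("aws", "aws", 1000.0),  # stays inside one cloud, so it is not
]

example_cost = monthly_transfer_cost(example_flows, example_prices)
```

Even a crude model like this makes it easier to see which data flows drive the bill before committing to an architecture.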

1. Data storage costs

Creating data does not automatically create value from that data. Data has to be stored, processed, analysed, and interpreted. One of the pitfalls is that it’s now so easy to create data that companies end up with large amounts of ‘dark data’ that is untapped or unused.

With a data ecosystem that is more complex than ever, capturing and storing lots of data without a strategy for extracting value from it can leave information scattered and siloed across multiple systems, clouds, and infrastructures, at a cost to the business.

An application may run in AWS generating data sourced from multiple IoT (Internet-of-Things) endpoints, for example. That data may then be moved to a Google Cloud Platform instance where it is used to train machine learning models.

In this multi-cloud scenario, the organisation would have to consider:

- Whether the original raw data is stored on both AWS and Google Cloud, or whether it is removed from AWS once it passes to the Google system.

- What the data lifecycle management practice is across all clouds in the business.

- The network configurations, bandwidth and latency requirements to and from each cloud.

- Security measures to protect data resting in each cloud, as well as data in transit.

Many of these considerations are interdependent, and latency is a good example. It may matter when sending the data from the AWS instance to the Google Cloud instance, or the organisation’s users may need low-latency access to the data within the Google instance to derive real-time value from it. Access to data and apps stored at different and sometimes distant locations across the cloud network is not instantaneous.

With a multi-cloud infrastructure, the data centre closest to end-users can be accessed at the lowest latency with minimum server hops, but the infrastructure providing access to those instances, and indeed, how data is transferred between instances, is important and can have a significant impact on cost.

This is particularly relevant in the age of the globalised workforce, with pockets of workers in multiple locations rather than concentrated in offices.

Perhaps older data that is no longer relevant for machine learning or is not frequently accessed can be moved to a lower-cost storage service back on AWS or a different platform.

Data lifecycle management policies can automatically move data from high-cost, low-latency storage systems to lower-cost storage that provides long-term backup. Another consideration is whether a copy of the data in one cloud storage service is sufficient, or whether redundancy is important enough that additional copies are needed in multiple clouds.
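The idea behind such a policy can be sketched as a simple rule that picks a storage tier based on how long a data set has gone without being accessed. The tier names and day thresholds below are hypothetical; each cloud provider has its own storage classes and its own way of configuring lifecycle rules.

```python
from datetime import date


def choose_storage_tier(
    last_accessed: date,
    today: date,
    infrequent_after_days: int = 30,   # hypothetical threshold
    archive_after_days: int = 180,     # hypothetical threshold
) -> str:
    """Return a tier name based on days since the data was last accessed."""
    idle_days = (today - last_accessed).days
    if idle_days >= archive_after_days:
        return "archive"      # lowest cost, slowest retrieval
    if idle_days >= infrequent_after_days:
        return "infrequent"   # cheaper storage for rarely read data
    return "hot"              # high-cost, low-latency storage
```

For example, with the default thresholds, a training data set last read 90 days ago would be assigned to the `"infrequent"` tier.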

2. The cost of underutilised resources

While the pay-as-you-use-it, consumption-based pricing of the public cloud has the potential to be more cost-effective than on-premises infrastructure, enterprises that fail to properly monitor their cloud environments can see costs spiralling out of control.

One of the key challenges here is the ‘multi-cloud by stealth’ phenomenon that arises as individual functions within the business procure and consume cloud services for their own purposes.

Many organisations allow functions outside of ‘IT’ to procure and manage their own cloud environments, leading to significant gaps in oversight. The biggest challenges here are groups buying the wrong environment for their requirements, over-provisioned services, licences that go unused, and cloud servers or capabilities that are unnecessarily duplicated.

By some estimations, as much as one-third of cloud spending is wasted because it is difficult to determine not only where expenditures are going, but also what value, if any, the organisation is getting.

To avoid falling into this trap, enterprises need to be able to identify underutilised resources they are still paying for, possibly through the use of automated cloud management tools that perform regular analysis and optimisation of cloud services.
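A minimal sketch of that kind of analysis is shown below. It assumes an inventory of resources with a monthly cost and an average CPU utilisation figure has already been exported from each cloud. The `Resource` type, the 10% threshold, and the sample inventory are all hypothetical, and real tools weigh many more signals than CPU alone.

```python
from dataclasses import dataclass


@dataclass
class Resource:
    name: str
    cloud: str
    monthly_cost: float
    avg_cpu_pct: float  # average CPU utilisation over the review period


def find_underutilised(resources, cpu_threshold_pct=10.0):
    """Return resources whose average CPU utilisation is below the threshold."""
    return [r for r in resources if r.avg_cpu_pct < cpu_threshold_pct]


def underutilised_spend_by_cloud(resources, cpu_threshold_pct=10.0):
    """Total the monthly cost of underutilised resources per cloud."""
    totals = {}
    for r in find_underutilised(resources, cpu_threshold_pct):
        totals[r.cloud] = totals.get(r.cloud, 0.0) + r.monthly_cost
    return totals


# Hypothetical inventory, for illustration only.
inventory = [
    Resource("web-1", "aws", 220.0, 46.0),
    Resource("batch-old", "aws", 310.0, 2.5),
    Resource("ml-train", "gcp", 900.0, 71.0),
    Resource("test-vm", "gcp", 85.0, 0.8),
]

flagged = [r.name for r in find_underutilised(inventory)]
spend = underutilised_spend_by_cloud(inventory)
```

Grouping the result by cloud gives a single view across providers, which is what tends to be missing when individual teams buy their own environments.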

3. The cost of cloud connectivity

Uptime is critical for many applications, but even a 99.9% service availability level allows for almost nine hours of downtime per year. This is one of the attractions of a multi-cloud architecture: it can increase uptime and availability by spreading the risk.
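The downtime figure follows from simple arithmetic, sketched below alongside the effect of spreading the risk. The combined calculation rests on two idealised assumptions: the environments fail independently of each other, and failover between them is instant. The function names are illustrative.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def annual_downtime_hours(availability_pct: float) -> float:
    """Hours of downtime per year permitted by an availability percentage."""
    return HOURS_PER_YEAR * (1 - availability_pct / 100)


def combined_availability_pct(*availability_pcts: float) -> float:
    """Availability of redundant environments.

    Assumption: failures are independent and failover is instant, so the
    service is down only when every environment is down at the same time.
    """
    all_down = 1.0
    for pct in availability_pcts:
        all_down *= 1 - pct / 100
    return 100 * (1 - all_down)
```

Under these assumptions, `annual_downtime_hours(99.9)` comes to roughly 8.76 hours, while two independent 99.9% environments combine to about 99.9999%. In practice the gain is smaller, because failures are rarely fully independent and failover depends on the connectivity between environments.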

But an effective multi-cloud redundancy strategy requires an effective cloud connectivity strategy, as there’s no point having fast failover to a different environment if access to that environment is hobbled by unreliable connectivity.

Connectivity is also an important component of the data storage and lifecycle management process, as well as getting the most value out of your resources. Again, there’s no point setting up a real-time compute instance if you can’t get data into or out of the instance in a low-latency manner - your connectivity becomes the bottleneck.

Organisations need to understand the core connectivity between these disparate environments.

In some cases this will mean modifying or upgrading your WAN, as legacy WAN architectures are typically poorly suited to the cloud and put a considerable maintenance burden on the organisation. Appropriate connectivity for SaaS, IaaS, and PaaS is not easily achieved on traditional infrastructure, and a suboptimal WAN can degrade the performance of cloud-based applications and adversely affect the end-user experience.

But if done well, using multiple clouds can help avoid vendor lock-in and reduce dependency on a single provider, even leveraging the competitive tension between the different cloud environments to reduce cost and boost service levels.

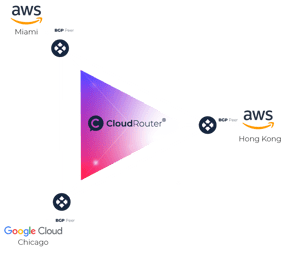

Console Connect’s CloudRouter® provides a way to securely and cost-effectively interconnect multiple cloud providers or regions by providing private Layer 3 connections between cloud providers.

Furthermore, the fact that Console Connect’s CloudRouter® operates on a mesh network reinforces the potential for disaster recovery in a multi-cloud environment versus similar offerings from other providers.
